Lately a lot of realtime graphics research has been focused on signal noise, and more specifically on adding noise (by skipping samples) in specific ways so that it can be removed again in later passes. Though the idea isn’t really new, it seems to have become particularly important lately.

There are a couple of good examples in open source:

- AMD’s denoiser and screenspace reflections from GPUOpen: https://github.com/GPUOpen-Effects/FidelityFX-Denoiser

- Ray traced far-field AO from the DirectX samples: https://github.com/microsoft/DirectX-Graphics-Samples/tree/master/Samples/Desktop/D3D12Raytracing/src/D3D12RaytracingRealTimeDenoisedAmbientOcclusion

I won’t go into huge detail here; I’ll just collect my thoughts and some observations I’ve made while looking at them. I also want to talk about the ambient occlusion approach described in “Practical Realtime Strategies for Accurate Indirect Occlusion” by Jimenez, Wu, Pesce & Jarabo.

AMD’s screen space reflections

AMD’s SSR attempts to add a BRDF based filter to the reflections it finds in screenspace. Standard SSR assumes no microfacets on the reflecting surface, as if everything was a perfect mirror reflection. This is usually countered by doing the SSR in downsampled resolution and then blurring everything in XY afterwards. The result looks surprisingly acceptable, but from a PBR point of view, it’s as if we’ve given everything a constant roughness value and some weird screen aligned BRDF.

AMD combines this with the “importance sampling” technique that was sometimes used with cubemaps before we started to prefer “split-sum” style approaches. The idea here is to use a distribution of normals on the reflecting surface, and sample a separate reflection for each one. We can distribute the normals according to microfacet equations such as GTR, etc, which can take in a roughness value and give us an accurate emulation of a real BRDF (more on this below).
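As a rough illustration of what “distributing the normals according to a microfacet equation” can look like, here is a minimal sketch that draws half-vectors from the GGX distribution using the commonly published inverse-CDF formula. This is not taken from the AMD sample; the function names, the `alpha = roughness * roughness` remapping, and the tangent-space convention (z is the surface normal) are all assumptions made for the example.

```python
import math
import random

def sample_ggx_half_vector(u1, u2, roughness):
    """Map two uniform numbers in [0, 1) to a tangent-space GGX half-vector."""
    alpha = roughness * roughness  # assumed perceptual-roughness remapping
    # Inverse CDF of the GGX normal distribution (weighted by cos theta).
    cos_theta = math.sqrt((1.0 - u1) / (1.0 + (alpha * alpha - 1.0) * u1))
    sin_theta = math.sqrt(max(0.0, 1.0 - cos_theta * cos_theta))
    phi = 2.0 * math.pi * u2
    return (sin_theta * math.cos(phi), sin_theta * math.sin(phi), cos_theta)

def reflect(view, half_vector):
    """Reflect a view vector (pointing away from the surface) about a half-vector."""
    d = 2.0 * sum(v * h for v, h in zip(view, half_vector))
    return tuple(d * h - v for v, h in zip(view, half_vector))

def sample_reflection_directions(view, roughness, count, rng):
    """Draw `count` reflection directions, one per sampled microfacet normal."""
    directions = []
    for _ in range(count):
        h = sample_ggx_half_vector(rng.random(), rng.random(), roughness)
        directions.append(reflect(view, h))
    return directions
```

Low roughness values keep the sampled normals close to the surface normal (so the reflections stay close to a mirror), while higher values spread them out. In the real thing the uniform numbers would come from a blue noise source rather than `random.Random`.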

However, to get a smooth result reliably, we will need hundreds if not thousands of samples, even if those samples are distributed intelligently. So our goal is to find a way to reduce the number of samples required from hundreds per pixel down to somewhere between one quarter and one.

We do this by assuming that samples for pixels near each other will be similar, and distributing the set of normals we want to test across nearby pixels in XY. Furthermore, we assume that samples from previous frames are likely to be similar to samples from the current frame, and also distribute normals across frames. Later we do filtering in space and time to effectively find the combined result of all of these samples.
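To make the bookkeeping concrete, here is a toy sketch of spreading one large sample set over a small tile of pixels and a short window of frames. The 4x4 tile, the 8 frame window and the function name are hypothetical, and this simple modulo scheme is only a stand-in; AMD’s sample uses blue noise to decide which pixel gets which sample.

```python
def sample_index(x, y, frame, tile=4, window=8):
    """Pick which member of a shared sample set this pixel evaluates this frame.

    Every (pixel-in-tile, frame-in-window) pair gets a distinct index, so a
    tile of 4x4 pixels filtered over 8 frames covers 4 * 4 * 8 = 128 samples
    while each pixel only ever traces one sample per frame.
    """
    in_tile = (y % tile) * tile + (x % tile)
    return (frame % window) * tile * tile + in_tile
```

With these numbers, a spatial filter that sees the whole tile plus a temporal filter that remembers the whole window is effectively combining 128 samples, which is the kind of count the brute force approach needs per pixel.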

Reducing the number of samples per pixel is the function that adds noise to the system; we then run a few screen space filters to attempt to remove this noise again. If those two functions are balanced well against each other, the result can be pretty surprising.

Generating samples

AMD uses blue noise described in https://eheitzresearch.wordpress.com/762-2/ (Eric Heitz et al) to generate the distribution of normals for the reflection. It looks like this could be further improved by applying importance sampling techniques (https://developer.nvidia.com/gpugems/gpugems3/part-iii-rendering/chapter-20-gpu-based-importance-sampling) to weight for the BRDF. The blue noise uses some small lookup tables, though it looks like AMD’s only using a fraction of the total lookup buffer; if you use this, I recommend looking for optimizations there. These parts of the solution are part of the “sample” rather than the library itself, and so AMD may be expecting that most users already have these kinds of things worked out.

For some of the techniques we use later, we want the normals to be distributed in time in roughly the same way that they are distributed in space, and indeed it looks like they will be with this approach. AMD adjusts the number of samples per pixel from 1/4 to 1 based on results from previous frames. Interestingly it does this based on temporal but not spatial variance. I’m not sure if there’s a particular advantage to this, because it looks like the shaders should have access to both variance values.
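Here is a minimal sketch of how a variance-guided ray count per 2x2 quad could work. It is my own illustration rather than AMD’s shader: the function name, the threshold value and the way the “base” pixel rotates with the frame index are all assumptions.

```python
def rays_for_quad(temporal_variance, frame, base_samples=1, threshold=0.002):
    """Decide which of the 4 pixels in a 2x2 quad trace a ray this frame.

    temporal_variance holds one value per pixel in the quad. `base_samples`
    pixels always trace (rotating with the frame index so each pixel gets a
    turn); any other pixel traces only if its temporal variance is high.
    """
    traced = []
    for i, variance in enumerate(temporal_variance):
        is_base = (i - frame) % 4 < base_samples
        traced.append(is_base or variance > threshold)
    return traced
```

A quiet quad ends up with one ray between its four pixels (1/4 sample per pixel), and a noisy quad ends up with all four (1 sample per pixel). Adding a spatial variance term would just be another condition in the `or`.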

Filtering

AMD then runs a spatial filter before a temporal filter, each in separate shaders. The spatial filter works in 8x8 tiles, and loads a 16x16 area of the framebuffer into groupshared memory for each tile. For each pixel, 16 nearby pixels are sampled, this time using a different type of noise, based on a small Halton sequence. That sequence is chequerboarded, but otherwise unchanging. So, even though we have a 16x16 region of pixels available, each individual pixel is only actually influenced by 16 spatially neighbouring pixels. The Halton sequence here is also worth watching, because that pattern can become visible in the final result unless it is obscured by other noise. Particularly when we’re using a higher quality blue noise earlier in the pipeline, this pass can counteract that. I’ve seen this create some banding patterns, but wouldn’t be surprised to see it create some patterns repeating in screenspace. On the other hand, it also helps reduce outliers (relative to sampling every pixel in an 8x8 block).
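For reference, a Halton sequence is easy to generate with the standard radical inverse construction (bases 2 and 3 for the two axes). The sketch below shows that construction plus a hypothetical mapping to integer pixel offsets; the `radius` value and the function names are mine, and the actual offsets baked into AMD’s shader may well be chosen differently.

```python
def radical_inverse(index, base):
    """Mirror the base-`base` digits of `index` around the decimal point."""
    result = 0.0
    fraction = 1.0 / base
    while index > 0:
        result += (index % base) * fraction
        index //= base
        fraction /= base
    return result

def halton_offsets(count, radius=4):
    """Return `count` integer (dx, dy) offsets in [-radius, radius]."""
    size = 2 * radius + 1
    offsets = []
    for i in range(1, count + 1):  # start at 1; index 0 maps to (0, 0)
        u = radical_inverse(i, 2)
        v = radical_inverse(i, 3)
        offsets.append((int(u * size) - radius, int(v * size) - radius))
    return offsets
```

Because the sequence is deterministic, every pixel that uses the same offsets sees the same footprint, which is why the pattern can show through unless something else breaks it up.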

Pixel contributions are weighted by the compatibility of their depth and normal (but not roughness). The way the depth weighting works here may cause greater noise on surfaces inclined to the camera, which may not be great for reflections specifically due to fresnel. The weighting is also pretty expensive, with one transcendental function for each of depth and normal per sample. It makes me wonder if a cheaper “empirically good enough” weighting but with more influencing pixels might be better in some cases.
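The general shape of this kind of depth and normal weighting looks something like the sketch below. The exact falloff functions and the constants (`depth_sigma`, `normal_power`) are assumptions for illustration and not AMD’s values; radiance is a single float here to keep things short.

```python
import math

def neighbour_weight(center_depth, center_normal, depth, normal,
                     depth_sigma=4.0, normal_power=32.0):
    """Weight a neighbour by how well its depth and normal match the center."""
    # One transcendental for depth (exp) and one for the normal (pow).
    depth_weight = math.exp(-abs(center_depth - depth) * depth_sigma)
    n_dot = max(0.0, sum(a * b for a, b in zip(center_normal, normal)))
    normal_weight = n_dot ** normal_power
    return depth_weight * normal_weight

def spatial_filter(center, neighbours):
    """Weighted average of (radiance, depth, normal) tuples around `center`."""
    total = 0.0
    weight_sum = 0.0
    for radiance, depth, normal in [center] + list(neighbours):
        w = neighbour_weight(center[1], center[2], depth, normal)
        total += radiance * w
        weight_sum += w
    return total / weight_sum  # the center always contributes weight 1
```

Note that the depth term compares raw depth values, so on a surface steeply inclined to the camera the neighbours quickly fall outside the tolerance and fewer of them contribute. That is the situation where I would expect extra noise.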

Temporal filtering happens afterwards, and works on the samples after spatial filtering (ie, the inputs to the temporal filter are already somewhat de-noised). Temporal filtering only works with 2 input samples: the new “today” sample and the accumulation of previous filtering (“yesterday” sample). We weight the compatibility of the yesterday sample by depth, normal and this time roughness as well.

We also clamp the “yesterday” value to “today’s” local spatial neighbourhood (this time sampling a 3x3 block of nearby values). This virtually eliminates ghosting; but I’ve seen it contributing to a strobing effect. The strobing effect can be quite apparent and distracting; I’m not sure if it’s caused by a lack of samples in the spatial filtering, too few samples in our neighbourhood, or just tolerances being too tight.
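Put together, the temporal step amounts to something like the following sketch. It uses scalar radiance and a fixed, hypothetical `history_weight`; in the real filter that weight would be scaled down by the depth, normal and roughness compatibility mentioned above, and the clamp would run per color channel.

```python
def temporal_resolve(today, yesterday, neighbourhood, history_weight=0.9):
    """Blend the accumulated history into the new sample.

    `neighbourhood` is the 3x3 block of today's values around the pixel.
    The history is clamped to that block's min/max before blending, so a
    stale value can never pull the result outside what today considers
    plausible.
    """
    low = min(neighbourhood)
    high = max(neighbourhood)
    clamped_history = min(max(yesterday, low), high)
    return history_weight * clamped_history + (1.0 - history_weight) * today
```

This also hints at where strobing could come from: if the 3x3 block is itself noisy, its min/max bounds jump around from frame to frame, and the clamp keeps throwing away history that was actually fine.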

AMD then also runs a final blur shader on the final result. This may be because the sample combines “mirror” reflections with “glossy” reflections. The mirror reflections use the more traditional SSR approach, which may need a blur as a final fixup. But for the glossy reflections, this blur isn’t ideal. We actually want the blurriness to come as a result of the normal distribution to correctly reproduce the BRDF. Furthermore, the blur is very similar to the spatial denoise filter. We have a smaller 5x5 kernel, but this time weight based on roughness and XY distance.

This final blur may be a little more memory bound than the other filters, but still seems fairly expensive. It makes me wonder if it might be better to remove the blur in favour of more samples for the spatial denoise, and perhaps use a different approach for mirror reflections.

Tile classification

SSR approaches are often combined with some kind of “classification” pass, which will filter out pixels that don’t need reflection information. Here, AMD just generates a list of XY values in a big table for pixels to run reflection samples on. This approach seems pretty powerful, and there may be room for further improvements here: for example, by allowing for greater variation in the number of samples per pixel (eg, from variance or roughness) or separating mirror and glossy reflections into different lists.
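As a sketch of the idea (including the mirror/glossy split suggested above, which is my variation and not what the AMD sample does), classification boils down to walking the roughness buffer and appending pixel coordinates to lists. The thresholds and names here are hypothetical.

```python
def classify_pixels(roughness, mirror_threshold=0.05, roughness_cutoff=0.6):
    """Build lists of (x, y) pixels that need reflection rays.

    `roughness` is a 2D list indexed as roughness[y][x]. Pixels rougher than
    `roughness_cutoff` are skipped entirely; the rest are split into a
    mirror list and a glossy list.
    """
    mirror = []
    glossy = []
    for y, row in enumerate(roughness):
        for x, r in enumerate(row):
            if r > roughness_cutoff:
                continue  # too rough to be worth tracing
            if r < mirror_threshold:
                mirror.append((x, y))
            else:
                glossy.append((x, y))
    return mirror, glossy
```

On the GPU the list lengths are what would feed the indirect dispatch arguments, so each list could get its own shader with its own sample count.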

There also might be some way to combine writing this classification list with a pixel shader for the actual geometry. That might allow for turning on flags such as anisotropy. That might run into complications with generating the “indirect args” buffer the system needs, though.

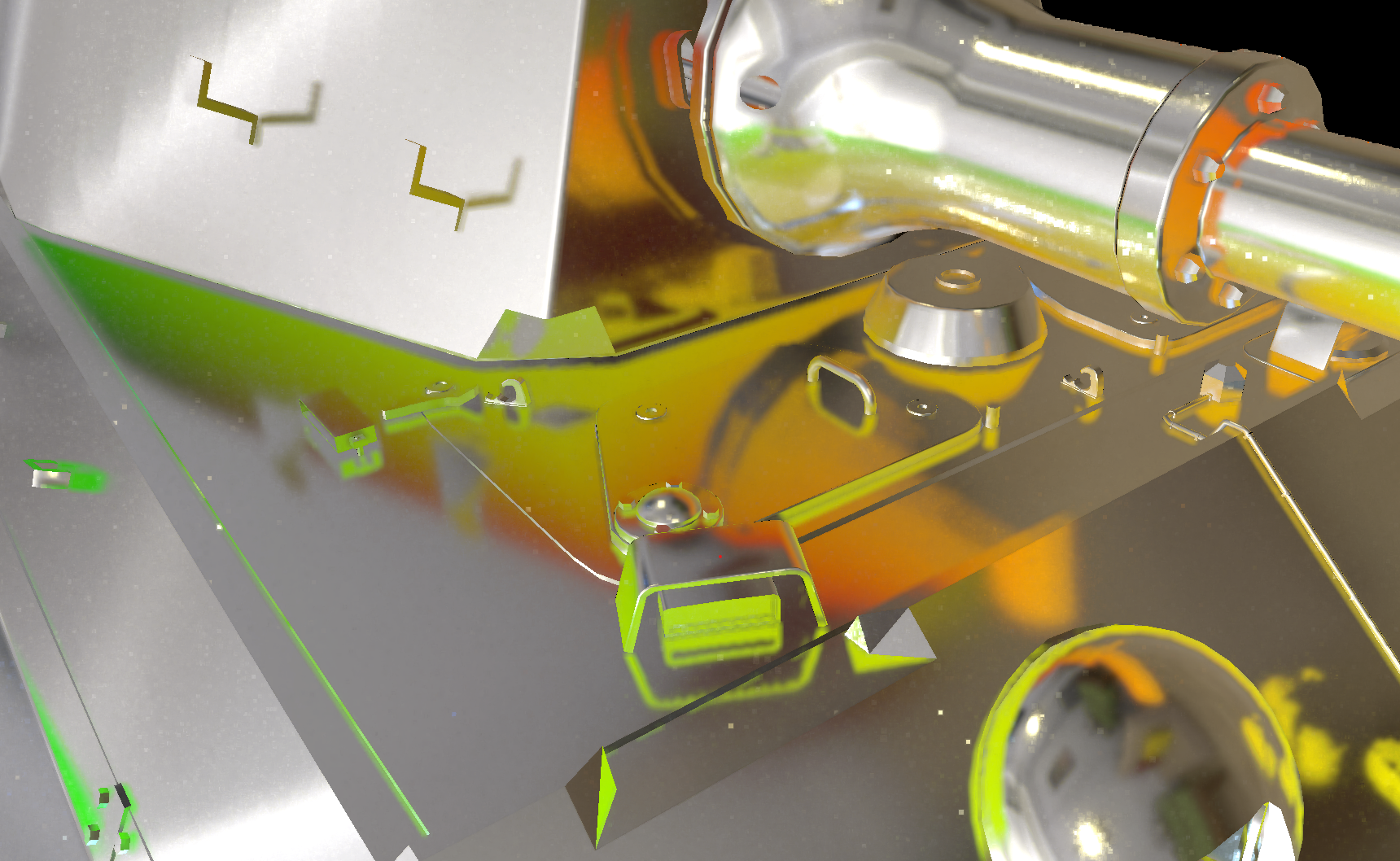

GGX screenspace reflections

Here’s a screen shot from this technique with a few of the tweaks suggested here. This is a medium-roughness metallic material, the screenspace reflections are in red & green (ie it’s not reflecting the proper color, just a red/green gradient), and there’s also a skybox reflecting. This uses the GGX equations and all blurring is coming just from the distribution of normals and from the BRDF itself (there’s no final screenspace blur). Though I’m not using the code from the AMD sample for this, I’ve replaced their approach with something I believe might be a little more optimal.

The skybox reflections are coming from a full resolution skybox; there’s no split-sum prefiltering or anything like that. It’s just sampling a highres skybox the same way it samples reflections in screenspace.

This is from a still camera, but it also looks pretty nice in motion (apart from the speckles I’ll mention in a bit). I’m using g_temporal_variance_guided_tracing_enabled=1 & g_samples_per_quad=1, which should give between 1/4 and 1 samples per pixel based on the amount of variance.

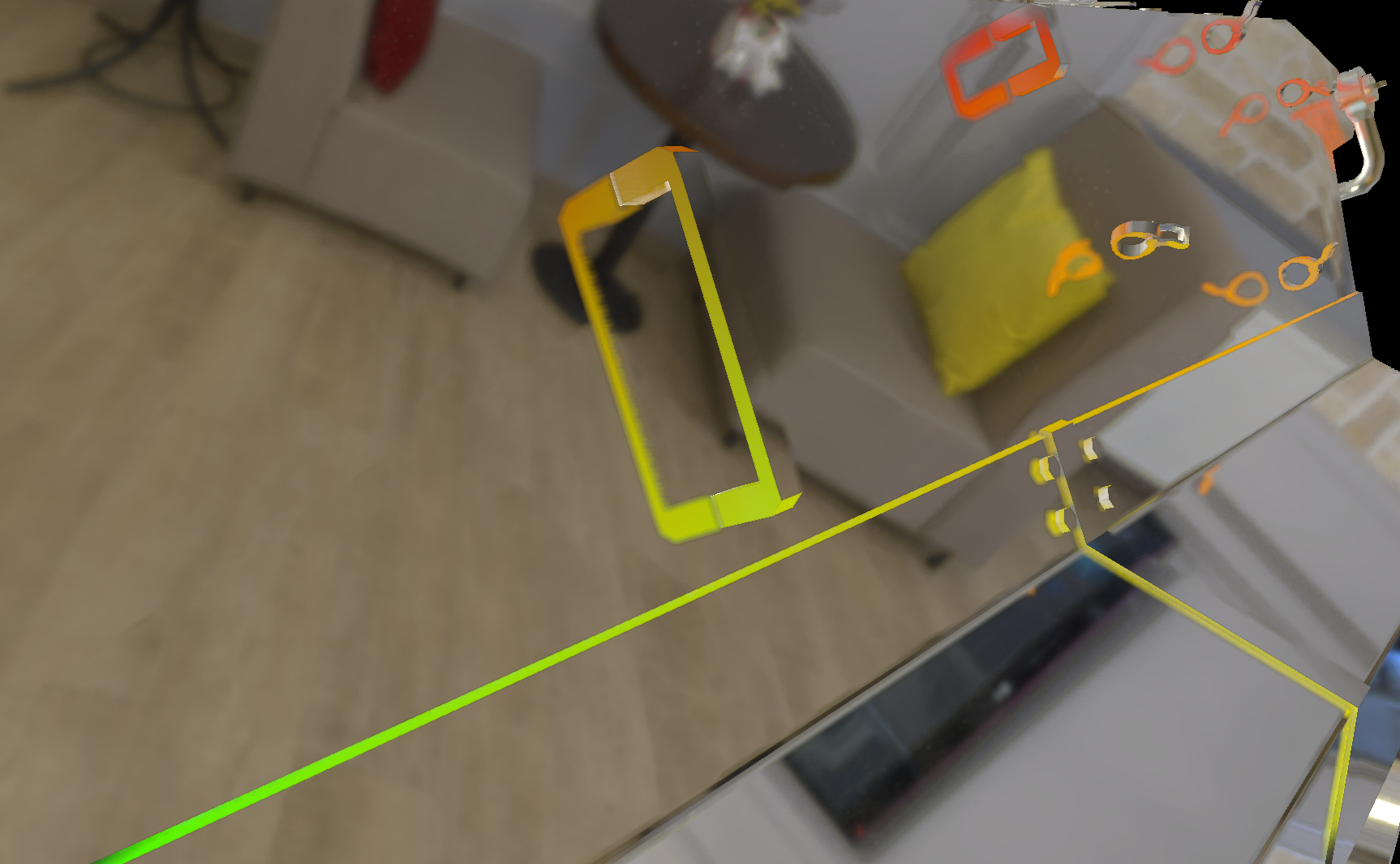

Here’s another couple of shots showing exactly how much blurring you can achieve through this approach:

Captions: low roughness material, medium roughness material.

This example shows just how filtered the reflections can get. If this was just a straightforward blur kernel, we’d need hundreds of samples. Indeed, for the IBL prefiltering I use thousands upon thousands of samples, and that’s essentially the same thing! But somehow we can get away with a spatially and temporally stable image with less than a sample per pixel. It seems kind of magic to me, arising somehow out of the crazy statistics of spatial and temporal coherency.

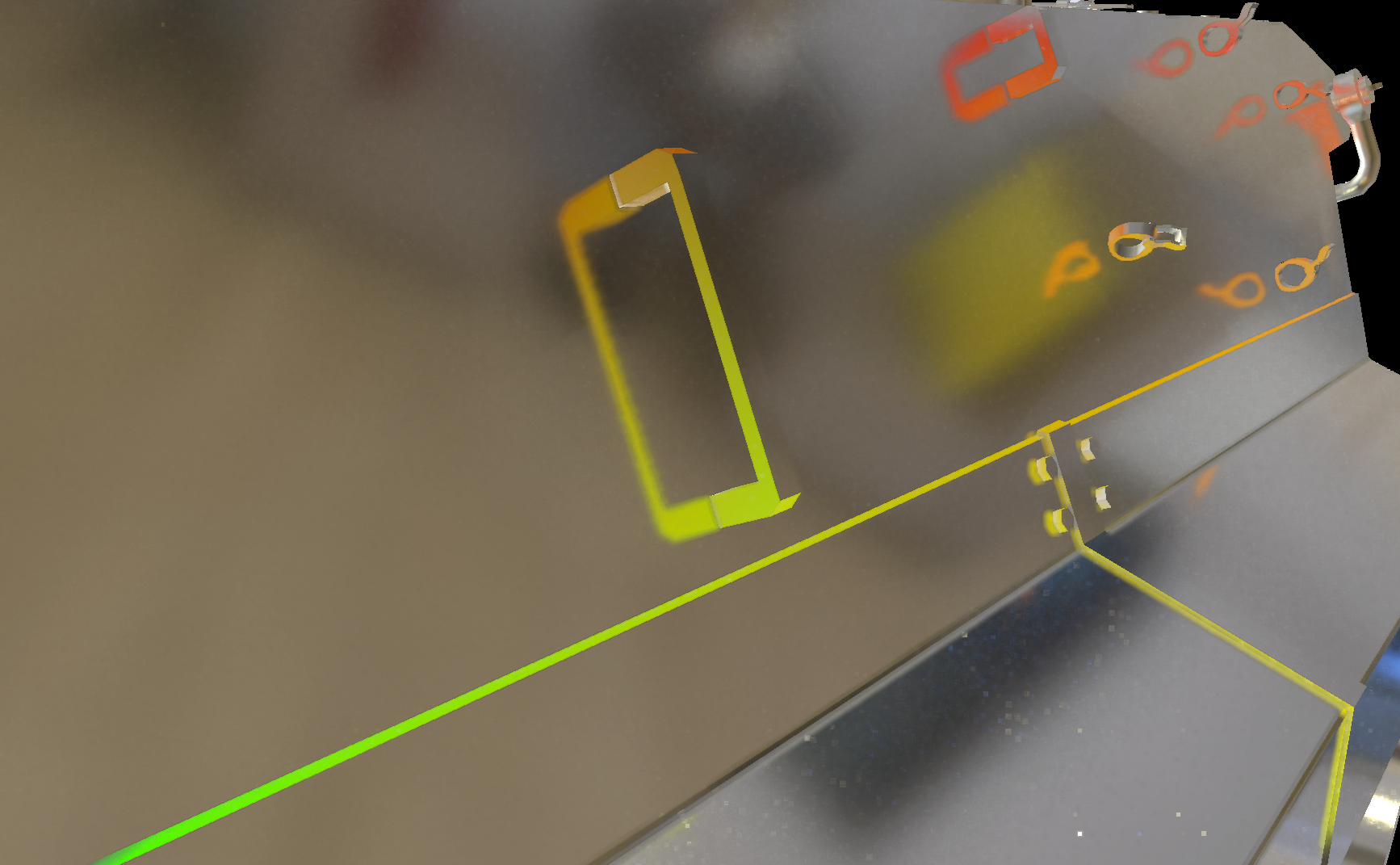

You may notice some slight discontinuities in the reflection. That’s not the filtering; that’s actually the resolution of vertex positions and normals causing a flat surface to be very slightly bent. Our sampling is actually precise enough to highlight it (whereas it might be hidden in simpler lighting models). Here’s a clearer shot showing the edges between triangles causing a slight discontinuity in the slope of the surface:

This approach should be able to handle some aspects of the BRDF that are lost from split-sum and some other table-based approaches (for example, stretching the reflection anisotropically). We can also happily use any BRDF for it; there are no special requirements. So it can be easily adapted to match lighting from another approach, for example. It can also be combined with dynamically rendered cubemaps, since there’s no prefiltering. Alternatively, you could do this in a reverse way and filter dynamically generated cubemaps over multiple frames.

However, it’s kind of expensive right now. I haven’t optimized my changes, and the original AMD code is also a little expensive. I feel like it would be possible on PS5-gen consoles, but it would be expensive enough to require giving something else up (if that makes any sense). It’s also possible that a lot of this can be repurposed with ray tracing in mind (but I’ll touch on that when talking about the DirectX sample AO approach later).

There’s also a couple of artifacts you may have noticed:

- in these screenshots, I’m using an importance-sampling type approach that distributes the normals to prioritize high variance parts of the GGX equation. However, it’s not accounting for variance in the incident light we’re reflecting. In this case, there are small bright parts of the skybox which can be a hundred times brighter than the surrounding pixels. This means they can sometimes be picked up by the sampling when you really don’t want them to be, and they will overpower other samples. This approach, even with all of its statistical majesty, can’t really deal with these kinds of outlier samples. Maybe there’s some more clamping or something else that can be done; I haven’t looked too deeply into it. You can see the problem in the bright speckles in the screenshots. These speckles aren’t temporally stable and really need some kind of fix

- at low roughness values, the colors look like they are getting quantized. This might be a result of passing radiance values through 10-bit and 16-bit precision conversions, or some other side effect of all of this filtering
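On the speckle problem from the first point, one kind of clamping that might be worth trying is limiting each new sample to some multiple of the average brightness around it. This is an untested guess rather than something I’ve verified in the renderer; the function name and the `max_ratio` value are hypothetical, and it works on a scalar luminance for brevity.

```python
def clamp_firefly(sample, neighbours, max_ratio=4.0):
    """Limit a sample to `max_ratio` times the mean of nearby samples."""
    mean = sum(neighbours) / len(neighbours)
    return min(sample, mean * max_ratio)
```

The obvious downside is that this throws away energy, so genuinely bright highlights (like the sun in a skybox) would end up dimmer than they should be.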

Next time

Playing around with this has given me a sense of just how powerful this idea of adding noise and filtering it out later can be. When you see just how blurry the reflections can be, it’s kind of crazy that this is coming from so few samples.

There’s more I can cover, but this has already gotten pretty long; so I’ll stop for now and try to come back with some thoughts on the other approaches I mentioned later.